
Arq Backup Vs Backblaze

In this article I share a bit of what I’ve learned in putting together a backup and data synchronization system for myself and my family. My goal is simple enough to state generally: I want to make sure all of my notes, documents, photos and videos are backed up and available from anywhere. Diving into the details of this goal is where things get complex. Happily, I think the end result is simple enough for others to emulate.

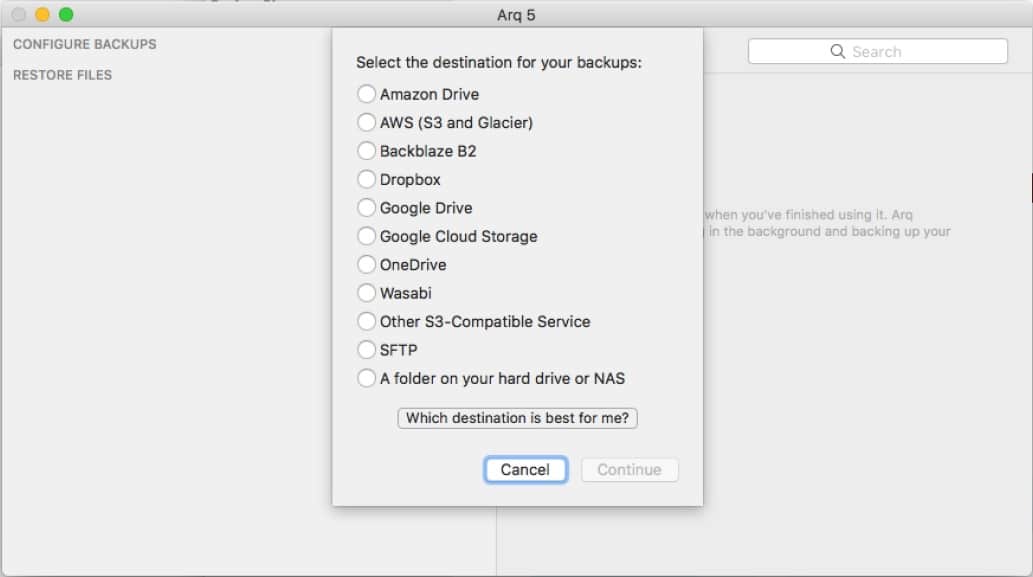

- Edit: Arq can back up to Dropbox just as it does to Backblaze B2 or Amazon S3. It uses a folder on Dropbox that isn't synced to any computer. I've got 1 TB of space and legitimately use only about 150 GB, so there is room to spare.

- Arq (my favourite) is most commonly used as a backup application with whatever storage you choose, e.g. AWS, Google, etc. I use Arq with cloud storage from both OneDrive (part of my Microsoft Office subscription) and Backblaze's B2, though it is unnecessary to use two storage providers.


- Arq Backup, a long-time favorite of Backblaze customers for its ease-of-use and powerful features, takes full advantage of the Fireball. Arq has an excellent how-to post on creating the initial backup onto the Fireball and then transferring the backup target to Backblaze. Backblaze invites you to take a look!

Gathering the requirements

Teasing apart my goal a bit more, I refined it to the following requirements:

- Every document from every device should be backed up to redundant cloud storage. Losing a device should not mean losing data, nor should losing all of my devices at the same time.

- I’d like immediate access to some documents from all devices with transparent synchronization. Some documents, like in-progress notes, drafts and to-do lists, need to be up to date at all times on all devices.

- Photos are a bit special. I’d like the ability to view and search through every photo and video in my entire library from desktop and mobile devices. In addition, many photos need to be shared with others.

- I’d like for my personal data to be encrypted at rest and in transport.

- I’m lazy and forgetful: whatever system I put together should be easy to maintain and mostly invisible.

- I need to store about 100GB of documents and 500GB of media.

Media is special

The special case of photos caught my attention, and I thought a bit more about how I take, organize, access, and share pictures. This led to a few more requirements:

- An easy, consistent workflow is important to me. Everything captured from either my phone or DSLR should end up in the same places.

- Edits to photos and meta-data on any platform should be synchronized and backed up.

- As mentioned above, easily sharing photos with friends and family is important to me, but in general my pictures are private.

- I favor searching over ongoing organization. Searching for places, dates, people, and tags should be easy to do.

- I usually take pictures in RAW format, and edit to produce variants of the original. Having a photo system that understands this is important to me.

Finally, I am our family’s IT administrator. Some of this project will support their needs, so the solutions need to be relatively simple and inexpensive.

Considering the options

Now that I understand the problem I’m trying to solve, it’s time to consider the tools available to construct a solution.

Assets

I have some assets already available that could potentially form part of a solution:

- A Mac mini running at my home that is always on.

- An older but perfectly good Drobo NAS at home.

- An Amazon Prime account.

- An offsite, small virtual private server at buyvm.net.

(spoiler: my end solution uses only the first of these).

Services

For keeping a set of folders of data in sync across all of my devices, I looked at Google Drive, Dropbox, and Syncthing. For backup software and services, I evaluated Backblaze, Carbonite, and Arq Backup using Backblaze B2 cloud storage. Finally, I looked at various photo services, including Flickr, Google Photos, SmugMug, and Amazon Prime Photos.

Data Synchronization

Both Google Drive and Dropbox are easy to set up and use. I already use Dropbox for work, so adding it for my personal documents is trivial. The same can generally be said for Google Drive. Both of these services also have excellent mobile applications for accessing the files. However, both fail my encrypted-at-rest requirement. There are tools available to build encrypted filesystems on top of these services, but they complicate the final product. In the end, I chose to use Syncthing and not store my documents in a cloud (though they are backed up to the cloud). Syncthing lets me control the storage: I use my Mac mini server as the “cloud,” and its contents are backed up continuously to a cloud service. Syncthing can be a bit tricky to set up and manage, certainly more so than Dropbox or Google Drive. If it proves too complex, and encrypted storage is still important, I would look to SpiderOak.

Backups

Backblaze’s backup solution seemed great at first analysis, but the costs become prohibitive when scaled to my entire family. However, Backblaze does offer a service called “B2” which is similar to but cheaper than S3. I selected Arq Backup with Backblaze B2 as the offsite, geo-redundant storage. B2 is cheap, fast, and easy to use, and Arq is cheap enough, lightweight, and easy to configure and manage on all my systems. Most importantly, it is easy to manage on all of my family computers, and can be configured to be bandwidth-friendly.

My annual bill for offsite backup of all of my data went from $120/yr with CrashPlan to less than $50 with Backblaze B2.

Media

All of my media is stored on my Drobo NAS at home, managed by Mylio, which I like, but don’t love, as a photo management application. The photos and metadata are completely backed up to Backblaze B2 as described above. Mylio is installed on all of my devices (including my phone), and keeps the various systems in sync: all photos end up in original quality on my server, and are accessible from my laptop and phone as needed.

Mylio is adequate for face detection and geolocation cataloging, but Google Photos is just too impressive to ignore. I’m using the Google Photos Backup tool on my Mac mini server to upload all of my photos to a private Google account. This violates my encrypted-at-rest requirement, but I can live with that for now. With Google Photos, I get amazing search and good (if limited) sharing.

Putting it all together

My daughter was kind enough to draw a diagram of the whole system:

Mylio takes care of photo syncing, and Syncthing handles all other documents. Arq + Backblaze B2 handle offsite backups, while Google Photos enables media sharing and searching. I’m not sure what role the duck plays, but it seems to be important.

Think about your backup plan. Do you have one? Ideally you should be following something similar to the 3-2-1 backup strategy of having 3 copies of data: two local and one offsite. I personally follow a modified strategy of 2 local and 2 offsite. One offsite is on a server that I own in another state, and the other is stored in Amazon S3.
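The 3-2-1 rule is commonly stated as: at least three copies, on at least two different types of media, with at least one copy offsite. Here is a minimal Python sketch of that check. The `Copy` record and `meets_3_2_1` function are hypothetical, and the names and media in the example are placeholders loosely shaped like the 2 local + 2 offsite layout described above, not an inventory of real hardware.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of the data set (hypothetical model for illustration)."""
    name: str
    medium: str   # e.g. "internal SSD", "external HDD", "cloud object storage"
    offsite: bool


def meets_3_2_1(copies: list[Copy]) -> bool:
    """At least 3 copies, on at least 2 media types, with at least 1 offsite."""
    media = {c.medium for c in copies}
    offsite = sum(1 for c in copies if c.offsite)
    return len(copies) >= 3 and len(media) >= 2 and offsite >= 1


# A "2 local + 2 offsite" layout; names and media are placeholders.
plan = [
    Copy("laptop", "internal SSD", offsite=False),
    Copy("home server", "external HDD", offsite=False),
    Copy("remote server", "server disk", offsite=True),
    Copy("Amazon S3", "cloud object storage", offsite=True),
]
print(meets_3_2_1(plan))      # True
print(meets_3_2_1(plan[:2]))  # False: only two copies, none offsite
```

A 2 + 2 layout passes the check with a margin: losing either offsite copy still leaves the rule satisfied.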

For my backups, I use Arq Backup. The strategy described below will work with other solutions too, but note that my experience is solely with Arq.


Loads of storage providers claim to be the cheapest. Backblaze B2 offers storage for $0.005 per GB/Month. Wasabi similarly offers pricing at $0.0059 per GB/Month (minimum 1TB @ $5.99). Comparatively, Amazon S3 looks ridiculously pricey at $0.023 per GB/Month. That’s more than 4x the price of B2 and nearly 4x that of Wasabi!

Let’s look at the cost for 1TB of data per month:

| Offering | Price per GB-Month | Total (1 TB/Month) |
|---|---|---|
| Backblaze B2 | $0.005 | $5.00 |
| Wasabi | $0.0059 | $5.99 |
| Amazon S3 | $0.023 | $23.00 |

But wait, there’s another option. In comes S3 Glacier Deep Archive (S3 GDA) to the rescue! Glacier Deep Archive is a cheaper tier of S3 that is designed for the same durability as regular S3, but at a much lower price.

Let’s look again, this time considering S3 GDA:

| Offering | Price per GB-Month | Total (1 TB/Month) |
|---|---|---|
| Backblaze B2 | $0.005 | $5.00 |
| Wasabi | $0.0059 | $5.99 |
| Amazon S3 | $0.023 | $23.00 |
| Amazon S3 Glacier Deep Archive | $0.00099 | $0.99 |
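To sanity-check the totals in the table, here is a minimal Python sketch of storage-only monthly cost, using only the prices quoted above. The provider names are plain dictionary keys rather than any SDK, and request, retrieval and egress fees are deliberately ignored. One assumption: Wasabi is modeled as a flat $5.99 per TB with the 1 TB minimum mentioned above, because $0.0059 × 1,000 GB works out to $5.90 rather than $5.99.

```python
# Monthly storage prices quoted above (USD per GB-month).
PRICE_PER_GB = {
    "Backblaze B2": 0.005,
    "Amazon S3": 0.023,
    "Amazon S3 Glacier Deep Archive": 0.00099,
}
# Wasabi modeled as a flat per-TB price with a 1 TB minimum (assumption).
WASABI_PER_TB = 5.99


def monthly_cost(provider: str, gb: float) -> float:
    """Storage-only cost; ignores request, retrieval and egress fees."""
    if provider == "Wasabi":
        return max(gb, 1000) / 1000 * WASABI_PER_TB
    return gb * PRICE_PER_GB[provider]


for p in ["Backblaze B2", "Wasabi", "Amazon S3", "Amazon S3 Glacier Deep Archive"]:
    print(f"{p:32} ${monthly_cost(p, 1000):6.2f}")
```

Dividing S3's $23.00 by these totals gives about 4.6x B2 and 3.8x Wasabi. Note that with the minimum, Wasabi costs $5.99 even for a few hundred GB.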

99c per month?!? Too good to be true? A little, yeah. There are a few key disadvantages:

- You pay for putting the data into S3 GDA

- You pay a little overhead for each object (file) in S3 GDA

- You pay to retrieve your data in case of emergency

- You can’t get your data immediately (up to 12 hours to retrieve)

In AWS, cost is one of the hardest things to factor. You pay for everything from storage to network traffic to API calls. It’s usually easy to get a rough estimate of how much you’ll spend on AWS, but rarely easy to nail it down precisely. That’s why, when exploring using Glacier Deep Archive, I did some rough calculations on API cost plus storage cost, and then just did it.

All costs pulled 2/16/21 from https://aws.amazon.com/s3/pricing

Let’s break down what you pay for, using my actual usage of 487GB as an example.

tl;dr

487GB will cost you:

- $4.90 one time to get into AWS

- $0.50 per month for storage

- $0.20 additional per month if you do incremental backups once daily

- $62.28 if you ever have to retrieve and download it

Data storage

Glacier Deep Archive charges $0.00099/GB/Month. 487GB is 487 * 0.00099 = $0.48213 for 30 days, right? No! See the ** after the pricing on the page?

For each object that is stored in S3 Glacier or S3 Glacier Deep Archive, Amazon S3 adds 40 KB of chargeable overhead for metadata, with 8KB charged at S3 Standard rates and 32 KB charged at S3 Glacier or S3 Deep Archive rates.(Source: S3 Pricing Page)
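The overhead rule turns into a short function of the object count. This is a sketch that assumes decimal units (1 GB = 1,000,000 KB) for simplicity. The object count of 90,000 is hypothetical, chosen because it is roughly what the overhead rows in the table below imply (about 2.9GB / 32 KB and 724MB / 8 KB). The helper names are illustrative only.

```python
STANDARD_PER_GB = 0.023  # S3 Standard, USD per GB-month (quoted above)
GDA_PER_GB = 0.00099     # Glacier Deep Archive, USD per GB-month
KB_PER_GB = 1_000_000    # decimal units, chosen for simplicity (assumption)


def overhead_gb(num_objects: int) -> tuple[float, float]:
    """Return (GB billed at Standard, GB billed at Deep Archive) of overhead.

    Each archived object adds 8 KB at Standard and 32 KB at Deep Archive rates.
    """
    return num_objects * 8 / KB_PER_GB, num_objects * 32 / KB_PER_GB


def overhead_cost(num_objects: int) -> float:
    """Monthly cost of the per-object metadata overhead alone."""
    std_gb, gda_gb = overhead_gb(num_objects)
    return std_gb * STANDARD_PER_GB + gda_gb * GDA_PER_GB


# Hypothetical archive of 90,000 objects.
std_gb, gda_gb = overhead_gb(90_000)
print(std_gb, gda_gb)                   # 0.72 2.88
print(round(overhead_cost(90_000), 5))  # 0.01941
```

The overhead is tiny per object, but it scales with the number of files, not their size, so many small files cost proportionally more than a few large ones.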

How big is each object? How many objects will I have? Who knows. I ignored that part of the calculation. Lucky for you, I’ve implemented this, so I have that number.

Not all your data goes into Glacier Deep Archive. Arq stores metadata about your archive, so that it doesn’t ever have to read the files from Glacier Deep Archive (which takes up to 12 hours and costs money). You’ll see some Standard storage below as well.

| Storage Amount | Tier | Price per GB-Month | Monthly Cost |
|---|---|---|---|
| 487GB | Glacier Deep Archive | $0.00099 | $0.48213 |
| 2.9GB | Overhead - GDA | $0.00099 | $0.002871 |
| 724MB | Overhead - Standard | $0.023 | $0.01656 |

Total cost? $0.501561 per month, or 50c. Pretty cheap for storing almost half a terabyte. But wait! There’s more.
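The total can be checked by summing the table rows. Note that the table's $0.01656 for the Standard overhead corresponds to 0.72 GB, so this sketch uses that figure for the 724MB row.

```python
# Rows from the storage table above: (size in GB, price per GB-month).
rows = {
    "Glacier Deep Archive data": (487, 0.00099),
    "Overhead - GDA": (2.9, 0.00099),
    "Overhead - Standard": (0.72, 0.023),  # 724MB, as rounded in the table
}

# Cost of each row, then the monthly total.
costs = {name: gb * price for name, (gb, price) in rows.items()}
total = sum(costs.values())
print(f"${total:.6f} per month")  # $0.501561 per month
```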

S3 APIs

Almost all AWS APIs have a cost to them. Glacier Deep Archive has one of the steeper costs of all the APIs. It costs $0.05 per 1,000 object PUT/LIST requests. S3 Standard costs $0.005 per 1,000 requests. That’s a 10x higher cost for GDA than for Standard.

According to my bill, the initial upload cost me about $4.40 in API requests. The API cost is both a one-time cost and an ongoing cost, because you are doing incremental backups daily. In my case, I pay about $0.20 per month for incremental backups. My backup plan is scheduled to run only once a day.
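Because request charges scale linearly, a bill can be worked backwards into request counts. This sketch uses the $0.05 and $0.005 per-1,000 prices quoted above; treating the whole $4.40 and the monthly $0.20 as PUT/LIST charges is an assumption, and the helper names are hypothetical.

```python
# Request prices quoted above, in USD per 1,000 PUT/LIST requests.
GDA_PUT_PER_1000 = 0.05
STANDARD_PUT_PER_1000 = 0.005


def request_cost(num_requests: int, price_per_1000: float) -> float:
    """Charge for a number of requests at a per-1,000 price."""
    return num_requests / 1000 * price_per_1000


def requests_for(cost: float, price_per_1000: float) -> float:
    """Invert request_cost: how many requests a given charge represents."""
    return cost / price_per_1000 * 1000


initial = round(requests_for(4.40, GDA_PUT_PER_1000))
print(initial)                                                 # 88000
print(round(request_cost(initial, STANDARD_PUT_PER_1000), 2))  # 0.44
print(round(requests_for(0.20, GDA_PUT_PER_1000)))             # 4000
```

At the Standard rate the same 88,000 requests would have cost about $0.44, which is the 10x difference in practice; the monthly $0.20 corresponds to roughly 4,000 requests.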


That brings monthly cost to ~$0.70 for ~500GB and total one-time cost to ~$4.70.

Early Delete and Retrieval

Two “gotchas” that you have to watch out for with Glacier Deep Archive are early deletes and retrieval. If you aren’t careful, you can rack up a large bill.

There is a minimum duration of 180 days for each object that enters into Glacier Deep Archive. Even if you store an object for a few seconds, and delete it, you’ll still be charged for 180 days worth of storage. Thus, you should be very careful to only put data into GDA that is going to remain stable.
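The 180-day minimum is easy to model: an object is billed for whichever is longer, the time it was actually stored or 180 days. This is a minimal sketch for a single object, assuming a 30-day month; the helper name is hypothetical.

```python
MIN_DAYS = 180             # minimum storage duration for Glacier Deep Archive
GDA_PER_GB_MONTH = 0.00099  # USD per GB-month (quoted above)


def billed_storage_cost(gb: float, days_stored: int) -> float:
    """Storage charge for one object, including the 180-day minimum.

    Approximates a month as 30 days (assumption).
    """
    billed_days = max(days_stored, MIN_DAYS)
    return gb * GDA_PER_GB_MONTH * billed_days / 30


print(round(billed_storage_cost(10, 7), 4))    # 0.0594 (billed as 180 days)
print(round(billed_storage_cost(10, 360), 4))  # 0.1188
```

Deleting 10 GB after a week costs the same as keeping it for six months, which is why only stable data belongs in Deep Archive.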

Retrieval is also costly. While I haven’t yet retrieved my data set, I can get a rough calculation on the cost of it. If we take the number of PUT requests I made and assume that I would make an equal number of GET requests to retrieve it, then we can understand how much it will cost to retrieve.

- Total PUT requests: $4.40 / 0.05 * 1000 = 88,000 (5c per thousand)
- GET object cost: (88,000 / 1000 * 0.10) + (487 * 0.02) = $18.54 (10c per thousand plus 2c per GB)
- Plus network data transfer from AWS to my machine: (487 - 1) * 0.09 = $43.74 (9c per GB, except the first GB is free)


Total restore cost? $43.74 + $18.54 = $62.28
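The restore estimate can be reproduced in a few lines of Python. This sketch makes the same assumptions as the text: one GET per object (so 88,000 requests, mirroring the PUT count), retrieval at $0.10 per 1,000 requests plus $0.02 per GB, and transfer out at $0.09 per GB with the first GB free. The `restore_cost` helper is hypothetical, not part of Arq or any AWS SDK.

```python
GET_PER_1000 = 0.10      # retrieval requests, USD per 1,000 (quoted above)
RETRIEVAL_PER_GB = 0.02  # retrieval, USD per GB
EGRESS_PER_GB = 0.09     # transfer out to the internet, first GB free


def restore_cost(num_objects: int, gb: float) -> tuple[float, float]:
    """Return (retrieval cost, transfer cost) for a full restore."""
    retrieval = num_objects / 1000 * GET_PER_1000 + gb * RETRIEVAL_PER_GB
    transfer = max(gb - 1, 0) * EGRESS_PER_GB
    return retrieval, transfer


retrieval, transfer = restore_cost(88_000, 487)
print(f"retrieval ${retrieval:.2f}, transfer ${transfer:.2f}, "
      f"total ${retrieval + transfer:.2f}")
# retrieval $18.54, transfer $43.74, total $62.28
```

Transfer out dominates: about 70% of the restore bill is network egress rather than Glacier retrieval itself.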

When S3 Standard is compared to other services like Backblaze or Wasabi, it is always shown to be more expensive. Certainly, others have simpler cost models: flat costing for storage and retrieval. However, the flexibility of management that AWS offers is unparalleled. The total cost of Amazon S3 Glacier Deep Archive, so long as you don’t have to restore, is almost always going to be far less than that of either Backblaze or Wasabi. At the same time, you gain access to the vast ecosystem of resources that AWS has to offer.


In the next article, I’ll discuss how to actually use Arq Backup with S3 Glacier Deep Archive. I’ll demonstrate how to use HashiCorp Terraform to create everything as “code.”